The seismic restructuring of data-driven practices triggered by the dawning of big data is best understood retrospectively. Suddenly, organizations were contending with myriad data structures, formats, and geographically distributed data sources, arriving in time frames and quantities that overwhelmed the capacity of existing systems.

Of equal importance was the ascendance of several supplementary technologies flourishing in the wake of the big data era, placing unprecedented emphasis on social, mobile and cloud platforms. The resulting infrastructure, architecture, and IT challenges indicated a paradigm shift of the entire data environment dictated by an onset of forces altering the very manner in which business was conducted.

Largely due to the rapid nature of this transformation and the immediacy of its demands, many organizations searched for the best solutions in the market. An abundance of point solutions were conceived to address large-scale, systemic changes to the data landscape. These piecemeal approaches provided limited value for the short term, yet frequently ended up more costly in the long run due to vendor lock-in and evolving business requirements.

Furthermore, immediate reactions requiring different tools for each facet of managing big data oftentimes complicated architecture while protracting time to value. The fundamental flaw in this method is that such tools are not expressly designed for big data, which naturally limits their worth in the wake of the big data revolution.

Big data’s influx pointed to a series of cross-industry factors producing innovations in the way data themselves are harnessed, from initial ingestion to analytics. These ubiquitous market forces were instrumental in requiring a comprehensive approach, designed for big data technologies, for each respective aspect of the data management process.

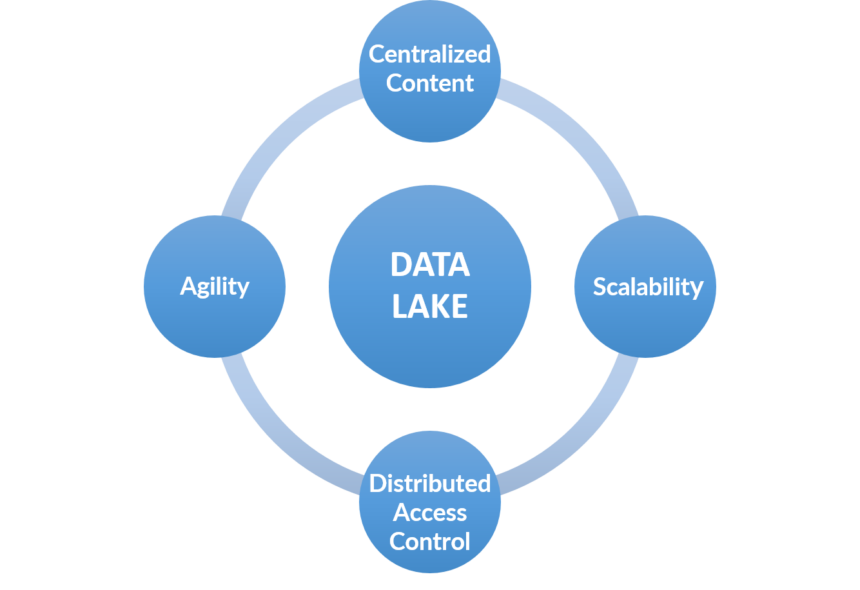

The large volumes of data have created the need for a centralized platform accounting for every aspect of data-driven practices, today and tomorrow, best realized in the form of an end-user governed, self-service Smart Data Lake.

Ubiquitous Market Forces

Understanding the nature of the market forces responsible for reshaping the data environment requires analyzing them in technological and non-technological terms. Of the former, the reliance on SMAC (Social, Mobile, Analytics and Cloud) represents the nexus of forces most determinant of the means of accessing big data. These technologies have profoundly influenced the shape and form of big data’s ingestion for the enterprise. Their most notable effect is perhaps the unprecedented value they’ve created with external data, which in turn facilitates much greater emphasis on the integration of such data with internal data. Similarly, they’re responsible for the prominence of multi-structured data and for the difficulty of extracting its inherent worth for the enterprise.

The novel intricacy wrought by such complicated data formats is uniformly eased by the streamlined architecture of a singular, centralized semantic platform. Specifically, the poly-structured format of the diversity of data sources and types is seamlessly merged via evolving semantic models linked together on an RDF graph. Positioned within this framework, all data elements are represented alongside each other in a standardized manner, supplanting the need to manage disparate databases, data models, and schemas for various structured data as mandated by traditional approaches. In such a singular platform, the architecture and underlying infrastructure are significantly simplified, decreasing costs accordingly.
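
As a minimal sketch of the idea of representing differently structured sources side by side, the following Python snippet flattens a flat record and a nested document into one set of subject-predicate-object triples. It uses plain tuples as a stand-in for an RDF graph rather than any particular RDF library, and every name and sample value in it (`row_to_triples`, `document_to_triples`, `customer:42`, the sample data) is a hypothetical chosen for illustration, not something prescribed by the platform described here.

```python
from typing import Dict, Set, Tuple

# A triple is (subject, predicate, object), the basic statement shape used by RDF.
Triple = Tuple[str, str, str]


def row_to_triples(subject: str, row: Dict[str, object]) -> Set[Triple]:
    """Flatten one flat record (for example, a relational row) into triples."""
    return {(subject, key, str(value)) for key, value in row.items()}


def document_to_triples(subject: str, doc: Dict[str, object], prefix: str = "") -> Set[Triple]:
    """Flatten a nested document (for example, JSON), joining nested keys with '.'."""
    triples: Set[Triple] = set()
    for key, value in doc.items():
        predicate = f"{prefix}{key}"
        if isinstance(value, dict):
            # Recurse into nested objects, extending the predicate path.
            triples |= document_to_triples(subject, value, prefix=f"{predicate}.")
        else:
            triples.add((subject, predicate, str(value)))
    return triples


def describe(graph: Set[Triple], subject: str) -> Dict[str, str]:
    """Collect every predicate/object pair recorded for one subject."""
    return {p: o for s, p, o in graph if s == subject}


# Hypothetical sample data: an internal CRM row and an external social profile.
crm_row = {"name": "Acme Corp", "region": "EMEA"}
social_doc = {"handle": "@acme", "metrics": {"followers": 1200}}

# Both sources land in the same structure, keyed by the same subject.
graph = row_to_triples("customer:42", crm_row) | document_to_triples("customer:42", social_doc)
```

Calling `describe(graph, "customer:42")` then returns the internal and external attributes together, without a separate schema for each source. A production system would use IRIs and a shared vocabulary instead of bare strings.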

Non-technological forces are typified by the accelerated pace of business and the extraordinary amounts of data parsed in these reduced time frames. The speed at which business is conducted is drastically influenced by the pervasiveness of the internet and the real-time responses it has ingrained in workflows. Compounding this expedience are other dictates of big data such as the current prevalence of sensor data, the rapidity of mobile communication, and the increased number of opportunities the synthesis of these factors is able to generate. The pivotal consideration in the impact of these forces is their temporary nature. Organizations have access to more opportunities, but they are also fleeting and require time-sensitive approaches to the utilization of data.

Comprehensive platforms account for these expedited temporal concerns in a manner which brings end users to the decision-making, analytics-based action stage much quicker than piecemeal approaches do. The singularity of the representations of individual nodes on a semantic graph reduces the time that other methods require for adjusting schemas and recalibrating models. The entire data preparation process, which can monopolize the time of the best data scientists or create inordinate reliance on IT for the most basic data-centric needs, is expedited. Users are able to dedicate much more time to data discovery and analytics, partaking of the speed at which modern business is enacted.

Redressing Conventional Issues

The aforementioned forces have shaped the data environment such that a centralized semantic platform is now necessary, largely because of issues with the increasingly tiered data management process. The influx of multi-structured data available from SMAC technologies in copious quantities delivered at rapid velocities can wreak havoc on the conventional domains of the data landscape, which include information governance, data preparation, data integration, search and discovery, business intelligence, and text analytics.

When accounting for these aspects of harnessing data in a siloed manner representative of a short-sighted, point-solution methodology, it is easy to fall prey to vendor lock-in or costly updates producing substantial amounts of downtime. The greatest drawback of this approach is the lack of agility when business requirements or processes change, tasking organizations with restarting their means of fulfilling one of these six vital functions. Thus, organizations endure longer periods in which their systems are not able to produce value, while simultaneously being forced to spend more on system maintenance.

The core value proposition of the centralized approach is the holistic manner in which it implements all of these requisites of data use. By providing a necessary overlay to existing systems, this method is able to effect gains in both the short term and the long term. Immediate benefits include a greater degree of enterprise governance oversight facilitated in part through standardized modeling which, in most instances, encompasses all enterprise data. Subsequently, data provenance and data modeling are easier to account for and more readily available to trace, which accelerates integration attempts. The result is faster time to insight in adherence to organization-wide governance protocols with highly visible data lineage for increased trust in data assets.

Subsequent gains relate to the nature of that insight, which far outstrips that gleaned from point solutions. The linked data approach of semantic graphs is focused on relationship discernment between nodes, which facilitates a contextualization of seemingly unrelated data elements unparalleled by other technologies. Users are able to involve more of their data to identify relationships between them, and their use cases, which are otherwise undiscoverable.
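
To make relationship discernment between nodes concrete, here is a small Python sketch that searches a set of linked statements for a chain connecting two seemingly unrelated elements. It assumes a breadth-first search over plain tuples, which is one simple way to surface such connections and is not claimed to be how any specific semantic graph product works. The function name `find_path` and all sample identifiers are hypothetical.

```python
from collections import deque
from typing import Dict, List, Optional, Tuple

Triple = Tuple[str, str, str]

# Hypothetical linked data: each triple is (subject, predicate, object).
triples: List[Triple] = [
    ("customer:42", "purchased", "product:7"),
    ("product:7", "supplied_by", "supplier:3"),
    ("supplier:3", "located_in", "region:EMEA"),
    ("customer:99", "located_in", "region:APAC"),
]


def find_path(triples: List[Triple], start: str, goal: str) -> Optional[List[Triple]]:
    """Return the shortest chain of links from start to goal, or None if unconnected."""
    # Index every link in both directions so relationships can be followed either way.
    neighbors: Dict[str, List[Tuple[str, str]]] = {}
    for s, p, o in triples:
        neighbors.setdefault(s, []).append((p, o))
        neighbors.setdefault(o, []).append((f"inverse of {p}", s))

    queue = deque([(start, [])])
    visited = {start}
    while queue:
        node, path = queue.popleft()
        if node == goal:
            return path
        for predicate, nxt in neighbors.get(node, []):
            if nxt not in visited:
                visited.add(nxt)
                queue.append((nxt, path + [(node, predicate, nxt)]))
    return None


# How is a customer connected to a region they were never directly linked to?
path = find_path(triples, "customer:42", "region:EMEA")
```

In this sample, the customer and the region are connected only through a product and its supplier, which is the kind of indirect relationship that stays hidden when each dataset lives in its own silo.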

Moreover, this linked data approach largely automates the data discovery process while provisioning exploratory analytics in which users can ask, and answer, as many questions as they can conceive on the fly. The results of analytics are singularly comprehensive, definitive, and all-encompassing. Achieving these objectives is difficult if not outright impossible with piecemeal approaches.

Anticipating Tomorrow

The most pressing market force cultivating the need for centralization is the ever-widening influence of big data itself. Projections for the amount of data produced over the coming years reveal its expansion is nowhere near ceasing or even plateauing. When one considers the number of connected devices all endlessly producing data in the Internet of Things alongside advancements in augmented reality and virtual reality, as well as the availability of artificial intelligence options to account for such data, it becomes apparent that big data’s size, speed, and structures will only increase in the near future.

A centralized, graph-aware environment is prepared for these impending advancements in numerous ways. Using it as the basis for Hadoop or other data lake settings endows it with the scale and performance consistency required to continually deliver value in such workload-intensive, data-driven deployments. More importantly, it is a monolithic means of streamlining each component of short-term point solutions not created for the demands of big data. The point-solution methodology is insufficient for the present and certainly not viable for the more stringent burdens of future applications of big data. Such a realization merely buttresses the notion that centralized, relationship-savvy semantic graph solutions represent the convergence of industry forces for competently managing data-centric needs.

Imperative Centralization

The transformative nature of big data can be seen everywhere data is deployed to increase business value. Its growth can be attributed to accelerating business speeds, a new ecosystem of supporting technologies, and rapid advancements in the diversity of data types in the enterprise. It has all but single-handedly set in motion market forces requiring a holistic means for managing each discrete component of transforming data to insight-based action. The impact of these forces is that simply annexing some additional tool to one’s existing infrastructure no longer suffices.

Instead, it mandates simplifying enterprise architecture, implementing cost-effective infrastructure utilitarian to the plethora of data types and technologies besieging the enterprise, and doing so with the oversight of organization-wide governance and provenance necessary for long-term reuse of data. Today’s market forces engendered the need for such holistic data management. Tomorrow’s will make it mandatory.