At the European Hadoop Summit in Brussels last week, SAP underlined its support for the fast-growing number of enterprise Hadoop deployments.

In the opening keynote, Forrester analyst Mike Gualtieri advised attendees that Hadoop was clearly ready for certain use cases in the enterprise, and that “adoption is the only option.”

In the opening keynote, Forrester analyst Mike Gualtieri advised attendees that Hadoop was clearly ready for certain use cases in the enterprise, and that “adoption is the only option.”

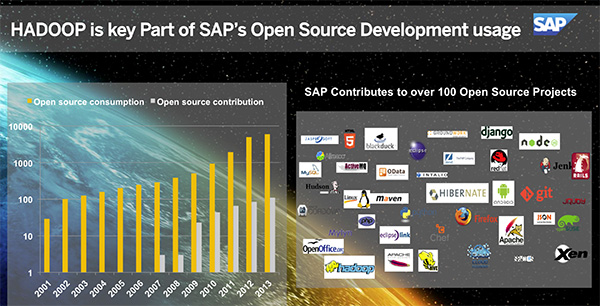

Irfan Khan, CTO for SAP Global Customer Operations, opened his keynote by emphasizing SAP’s open-source credentials, including contributions to over 100 different projects. For example, SAP has 50+ committers in the Eclipse community and has contributed more than 4.6 million lines of code.

Irfan explained that after more than forty years of working with large enterprises, SAP systems hold vast amounts of valuable business data, and there is a need to enrich that data and give it context using the kinds of data being stored in Hadoop:

“There is a need to bring Enterprise data to Hadoop and Hadoop to Enterprise data. As a strategic enabler of today’s global economy, SAP is uniquely positioned to use our innovations to solve these complex challenges and we want to work along with the Hadoop community to achieve this.”

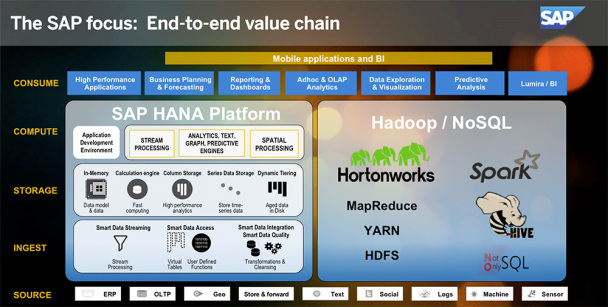

“SAP has long seen Hadoop and NoSQL as integral to a complete and unified Big Data solution and it has worked — and continues to work — to treat Hadoop as a valuable part of and extension to its solutions.”

It’s clear that in order for Hadoop to reach the next level of enterprise adoption, it will have to link more tightly with existing information systems.

Irfan outlined some of the key ways SAP works with Hadoop today:

- SAP Analytics solutions can access HDFS stores directly or through projects such as Spark, Impala, YARN and Hive, allowing data wrangling, visualization, reporting, and predictive analysis on data held in Hadoop. For example, SAP's data discovery tool SAP Lumira works with the Hortonworks Hadoop Sandbox.

- SAP Data Services can interact with both the SAP HANA platform and Hadoop, through projects such as Pig and Hive, to move and transform data and gain insight from it.

- The SAP HANA platform can leverage the Hadoop ecosystem in a number of ways, ranging from streaming data, to virtualization and federation (pushing queries down into Hadoop and returning the result sets), to launching MapReduce jobs. This allows HANA's in-memory speed and powerful engines and libraries to be integrated relatively seamlessly with Hadoop's mass storage and distributed processing.

- SAP resells and supports Hortonworks and other Hadoop distributions.

But Irfan emphasized that this was just the start, with plans to:

- Drive tighter integration of Hadoop and SAP products, including the HANA Cloud Platform (HCP).

- Investigate synergies around information lifecycle management.

- Support the enterprise compliance projects that will be essential to large-scale adoption of Hadoop.

In particular, SAP is one of the founding members of Apache Atlas, a new project from the Data Governance Initiative:

“Apache Atlas proposes to provide governance capabilities in Hadoop that use both a prescriptive and forensic models enriched by business taxonomical metadata. Atlas, at its core, is designed to exchange metadata with other tools and processes within and outside of the Hadoop stack, thereby enabling platform-agnostic governance controls that effectively address compliance requirements.”

More information about SAP’s Hadoop initiatives and plans will be available at SAPPHIRE NOW in Orlando.