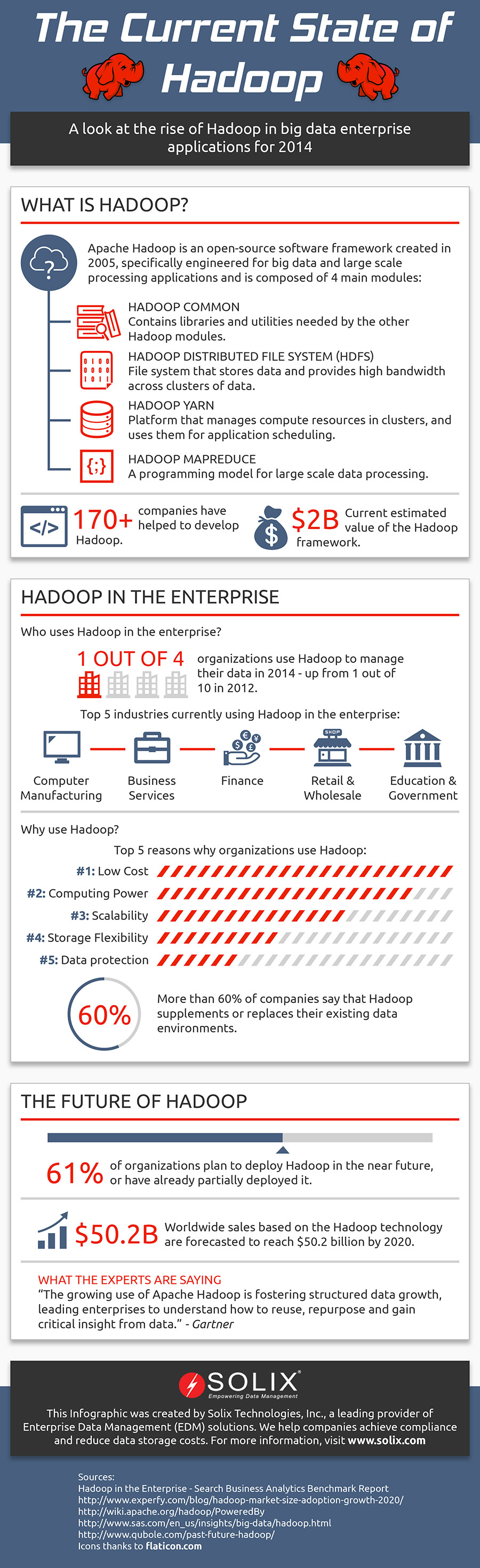

Since 2005, Hadoop has been the foundation for hundreds of big data companies, thanks to its open-source nature. Over 170 well-known companies have contributed to its development since launch, and the project is currently valued at over $2 billion.

But what exactly is Hadoop, and why is it so important? In layman's terms, Hadoop is a framework for creating and supporting big data and large-scale processing applications, something traditional software isn't able to do. The whole Hadoop framework relies on four main modules that work together:

- Hadoop Common is like the SDK for the whole Hadoop framework, providing the libraries and utilities that the other three modules need.
- Hadoop Distributed File System (HDFS) is the file system that stores all of the data across a cluster of machines with high-bandwidth access (think RAID, but spread across machines rather than disks).
- Hadoop YARN is the module that manages the cluster's computational resources and schedules applications on them.
- Finally, Hadoop MapReduce is the programming model for writing large-scale big data processing applications.
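To make the MapReduce programming model a little more concrete, here is a minimal single-process sketch of the classic word-count job in plain Python. It only imitates the map, shuffle and reduce phases in memory on one machine; the function names (`map_phase`, `shuffle`, `reduce_phase`, `word_count`) are hypothetical, and this is not Hadoop's actual Java API, which runs these phases in parallel across a cluster.

```python
from collections import defaultdict
from itertools import chain


def map_phase(line):
    # Map: emit a (word, 1) pair for every word in one input line.
    return [(word.lower(), 1) for word in line.split()]


def shuffle(pairs):
    # Shuffle: group all emitted values by key. In Hadoop the framework
    # does this step between the map and reduce phases.
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(key, values):
    # Reduce: combine all values for one key into a single result.
    return key, sum(values)


def word_count(lines):
    # Hypothetical driver that chains the three phases sequentially.
    mapped = chain.from_iterable(map_phase(line) for line in lines)
    grouped = shuffle(mapped)
    return dict(reduce_phase(k, v) for k, v in sorted(grouped.items()))


if __name__ == "__main__":
    docs = ["big data big clusters", "data in clusters"]
    print(word_count(docs))  # {'big': 2, 'clusters': 2, 'data': 2, 'in': 1}
```

The appeal of the model is that a developer only writes the map and reduce functions; splitting the input, moving intermediate pairs between machines and recovering from failures are left to the framework.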

Hadoop is a very powerful framework for big data companies, and its use has been on the rise since its inception in 2005: over 25% of organizations currently use Hadoop to manage their data, up from 10% in 2012. Because Hadoop is open source and flexible enough to meet a variety of needs, it has been applied to almost every industry imaginable in the current big data boom, from finance and retail to education and government.

Solix, a leading provider of Enterprise Data Management (EDM) solutions, has created an infographic that goes into more depth on Hadoop and makes some interesting predictions; you can take a look at it below. Is your company using Hadoop to manage your data?