Finally, the dystopian and utopian views on AI meet in a modern-day Clash of the Tech Titans. The Guardian calls it “the world’s nerdiest fight.” Tesla’s Elon Musk and Facebook’s Mark Zuckerberg are arguing over what, exactly, the future relationship between artificial intelligence and humans will look like, and whether the advance of AI robots will require regulation to keep humans from suffering at the hands of our future AI overlords.

Okay, that’s not exactly the argument, but it’s very close. Musk believes government should preemptively impose regulations on AI because the technology comes with a “fundamental risk to the existence of civilization.” During a Facebook Live broadcast, a viewer asked Zuckerberg what he thought of Musk’s viewpoint.

Zuckerberg said, “I have pretty strong opinions on this. I am optimistic. And I think people who are naysayers and try to drum up these doomsday scenarios – I just, I don’t understand it. It’s really negative and in some ways I actually think it is pretty irresponsible.”

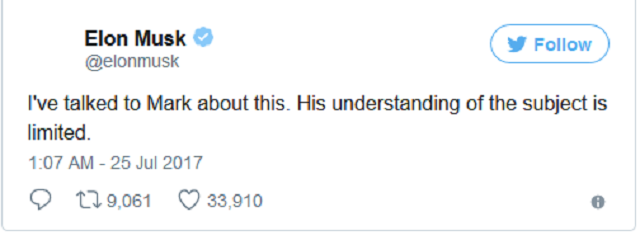

Musk responded on Twitter:

Given that Zuckerberg doesn’t really have any coding skills and Musk is actually knee-deep in the engineering aspects of his businesses, it’s safe to assume Musk probably has a better understanding of AI. But that doesn’t mean he knows the future. The debate does present a very pressing issue: Are the advantages we’re starting to see with AI part of a Trojan horse leading to a dystopian future?

Adaptive Learning

Adaptive learning is one area that’s very relevant to the question of where AI is leading us.

Adaptive learning software is a big part of the rapid changes happening in education, changes that affect how educational leaders make decisions. Basically, the software uses machine learning to determine where a student’s knowledge level stands in relation to the subject matter, as well as how the student best processes information. Then, the software personalizes delivery of coursework based on the individual’s learning style, grasp of the material, and intelligence.
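To make that loop concrete, here is a minimal Python sketch of the general idea: estimate what a student knows from their answers, then serve the weakest topic next. This is not Knewton’s algorithm or any vendor’s real method. The `StudentModel` class, the topic names, the 0.5 starting estimate, and the 0.3 learning rate are all assumptions made up for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class StudentModel:
    """Hypothetical per-student record: one mastery estimate (0 to 1) per topic."""
    mastery: dict = field(default_factory=dict)
    learning_rate: float = 0.3  # assumed weight given to the newest answer

    def record_answer(self, topic: str, correct: bool) -> None:
        # Exponential moving average: nudge the estimate toward 1 or 0.
        previous = self.mastery.get(topic, 0.5)  # assume 0.5 when nothing is known
        target = 1.0 if correct else 0.0
        self.mastery[topic] = previous + self.learning_rate * (target - previous)

    def next_topic(self, topics: list) -> str:
        # Personalize delivery: serve the topic with the lowest estimate next.
        return min(topics, key=lambda t: self.mastery.get(t, 0.5))


topics = ["fractions", "decimals", "percentages"]
student = StudentModel()
student.record_answer("fractions", True)   # estimate rises above 0.5
student.record_answer("decimals", False)   # estimate drops below 0.5
print(student.next_topic(topics))
```

Real products presumably model far more than right and wrong answers, but even this toy version shows why the approach scales in a way one teacher with thirty students cannot.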

This is AI doing what teachers can’t do because they don’t have the time and resources. Educators have long known each individual has her own learning style and intelligence quotient. Cater education to the individual, and the individual could get better at grasping concepts. As conceptual, abstract thinking improves, intelligence improves.

Score one for Zuckerberg. If AI can help kids become more intelligent, they’ll grow up equipped to thrive in a world where AI is all around us. With adaptive learning software, all kids learn the same material. Teachers are there to help kids think analytically about the material and to promote creative thinking in order to solve problems.

Arizona State University is one institution that has already made inroads with adaptive learning software. There’s a lot at stake. Carl Hermanns is program coordinator for ASU’s Master of Education in Educational Leadership. He feels there’s a need for systemic change in education because “there are inequities in the education system that can block students from reaching their full potential.” Personalized learning software uses machine learning and tons of student data to affect education at the systemic level.

Can big data and AI help students realize their full potential? In an effort to answer this question, ASU partnered with Knewton, a company that specializes in adaptive learning software. In 2011, ASU employed Knewton’s software to help educate 5,000 students in a remedial math class. Before Knewton, the pass rate for the class was 66 percent; with Knewton, the pass rate rose to 75 percent. ASU also experienced a 47 percent decrease in student withdrawals from Knewton math courses.
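It helps to separate percentage points from relative change when reading those pass rates. The short Python sketch below works only from the figures quoted above. It assumes, purely for illustration, that the same 5,000-student class size applies to both pass rates, which the source does not state.

```python
before, after = 0.66, 0.75   # pass rates quoted above
students = 5_000             # class size quoted above; assumed equal in both cases

point_gain = (after - before) * 100               # change in percentage points
relative_gain = (after - before) / before * 100   # change relative to the old rate
extra_passes = round((after - before) * students) # additional students passing

print(f"{point_gain:.0f} percentage points")
print(f"{relative_gain:.1f} percent relative increase")
print(f"about {extra_passes} more students passing")
```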

Jose Ferreira is the founder and CEO of Knewton, Inc. He feels education is the proving ground for humanity’s relationship with AI and big data. That’s because the machine learning algorithms in adaptive learning software have access to rich data.

Each datapoint a student generates is connected to a right or wrong answer, so there’s very little irrelevant data like there is with big data from search engines and social media. Not only does the adaptive software learn about individual students’ learning patterns, over time it will also be able to develop a “knowledge graph.” According to Inside Higher Ed’s Steven Kolowich, the knowledge graph is “a comprehensive map of how different concepts are related to one another in the context of learning.”
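A rough way to picture a knowledge graph is as a map of which concepts depend on which. The Python sketch below is only an illustration of that idea, not Knewton’s actual data model: the `PREREQUISITES` map, the concept names, and the `ready_to_learn` helper are all hypothetical.

```python
# Hypothetical prerequisite map: each concept lists the concepts that come first.
PREREQUISITES = {
    "counting": [],
    "addition": ["counting"],
    "multiplication": ["addition"],
    "fractions": ["multiplication"],
    "percentages": ["fractions"],
}


def ready_to_learn(mastered: set) -> list:
    """Return concepts not yet mastered whose prerequisites are all mastered."""
    return sorted(
        concept
        for concept, prereqs in PREREQUISITES.items()
        if concept not in mastered and all(p in mastered for p in prereqs)
    )


print(ready_to_learn({"counting", "addition"}))
```

A student who has mastered counting and addition is ready for multiplication, but not yet for fractions or percentages.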

In other words, the software will be able to help educators understand how students across the learning spectrum interact with concepts. This could potentially enable educators to better understand how to teach each student conceptual thinking. Today’s students could learn the type of conceptual thinking that will enable them to get the jobs that will not be dominated by robots in the future.

The Dystopian Side of Adaptive Learning

There’s a potentially sinister element to this. Knewton’s software can develop “psychometric profiles” of students. The profile will tell enquiring minds exactly what the student knows, how well they learn, and how they learn.

Knewton’s Jose Ferreira thinks a lot about Gattaca, the dystopian sci-fi film starring Ethan Hawke, in which biometric information determines a person’s opportunities and standing in society. Knewton’s psychometric profiles could serve much the same purpose as Gattaca’s biometric info.

If the profile stamps the student as being weak in a conceptual area, future higher education programs and employers could use that information to exclude certain candidates. But what if the student is from an impoverished home? It’s clear that poverty negatively impacts educational outcomes for children. It’s not clear that adding AI in the classroom will help reduce the effects of poverty.

Score one (or more) for Musk. Psychometric profiles are flawed for multiple reasons. Conceptual thinking is tied to abstraction, which in turn is tied to creativity. AI can’t teach creativity, and if a student’s teacher doesn’t help the student learn creative analysis (the linking of seemingly unconnected concepts and the possibility of unorthodox, unexpected results), the student will be left behind.

Furthermore, the more adept AI becomes at helping students pass classes and get good grades, the more likely it may be that AI will replace teachers at lower levels. That would displace tons of jobs, and it would teach children little more than rote thinking. It would teach children how to think more like AI, even though there isn’t always a right or wrong answer in the complex world of human relationships. It’s easy to envision Musk saying, “If we teach children how to be more like AI, then AI is the authority, and that’s something we don’t want.”

The Answer Is Not Black and White

Artificial intelligence will not make us slaves to robot overlords, nor will it introduce a utopian society where robots do all the work for us while governments equally distribute the income. Rather, AI could help a child get better at math as the child’s dad loses his factory job to a robot. We’ll need to mitigate the job displacement for millions of workers. But we’ll also need to ensure AI innovation can thrive and help people in the areas where it really can.