The New York Times reports that a respected psychology journal is due to publish a paper purporting to show “strong evidence” for extra-sensory perception:

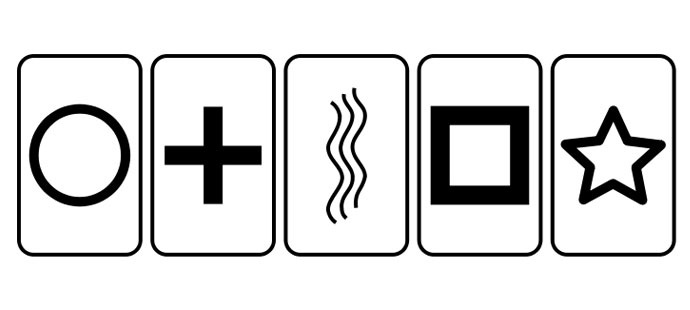

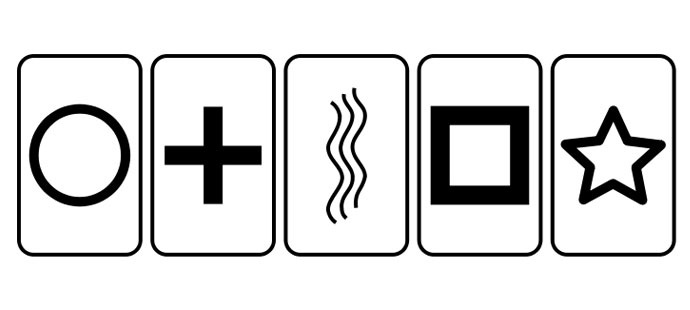

“A software program randomly posted a picture behind one curtain or the other — but only after the participant made a choice. Still, the participants beat chance, by 53 percent to 50 percent, at least when the photos being posted were erotic ones. They did not do better than chance on negative or neutral photos.”

Crucially, no “topflight statisticians” were involved in the peer review.

When I was at university, struggling to use a sophisticated statistics package on a mainframe as part of my econometrics degree, I dreamed of having a program that would cruise through every possible combination of variables and tell me which ones were correlated. That ability now exists, but the danger is that few people realize how much higher the bar must be set before a result can be deemed significant when so many combinations are being tested.

Given a large enough set of random numbers, you will almost certainly find a “significant” relationship, especially if that’s exactly what you’re looking and hoping for.
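To make this concrete, here is a small Python simulation (an illustration of the general point, not anything taken from the study). It generates purely random variables, correlates every pair, and counts how many pairs clear a naive 5% significance test. The number of variables, the sample size, the seed, and the large-sample cutoff |r| > 1.96/√n are all illustrative assumptions.

```python
import itertools
import math
import random


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mx = sum(xs) / n
    my = sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    return cov / math.sqrt(vx * vy)


def spurious_correlations(n_vars=30, n_obs=100, seed=1):
    """Correlate every pair of purely random variables and return the pairs
    that pass a naive two-sided 5% test.

    Uses the approximate large-sample cutoff |r| > 1.96 / sqrt(n_obs);
    no variable has any real relationship with any other.
    """
    rng = random.Random(seed)
    data = [[rng.gauss(0, 1) for _ in range(n_obs)] for _ in range(n_vars)]
    threshold = 1.96 / math.sqrt(n_obs)
    hits = []
    for i, j in itertools.combinations(range(n_vars), 2):
        r = pearson(data[i], data[j])
        if abs(r) > threshold:
            hits.append((i, j, r))
    return hits


if __name__ == "__main__":
    n_vars = 30
    pairs = n_vars * (n_vars - 1) // 2
    hits = spurious_correlations(n_vars=n_vars)
    print(f"{len(hits)} of {pairs} pairs look 'significant', yet every variable is noise")
```

With 30 variables there are 435 pairs, so around 5% of them, roughly twenty, should look “significant” by chance alone, even though there is nothing real to find.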

To me, the experiment above sounds as if it may have this problem: for example, if there were lots of different categories of photos, and the “significant” relationship was cherry-picked from the available results. And even if the significance threshold was indeed raised to take account of this, the result could still be a fluke (if the choice is between overturning everything we know about science and a fluke result, I’m going with the latter).
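One standard way of raising the bar for many comparisons is the Bonferroni correction, which divides the significance threshold by the number of tests. The Python sketch below shows both why some correction is needed and how much stricter it makes things. It assumes a 5% threshold, and the family-wise error formula additionally assumes the tests are independent; the ten p-values are made up for illustration and are not from the paper.

```python
def family_wise_error_rate(alpha: float, m: int) -> float:
    """Probability of at least one false positive across m independent tests,
    each run at significance level alpha, when no real effect exists."""
    return 1 - (1 - alpha) ** m


def bonferroni_threshold(alpha: float, m: int) -> float:
    """Per-test threshold that keeps the family-wise error rate at or below alpha."""
    return alpha / m


def significant_after_bonferroni(p_values: list[float], alpha: float = 0.05) -> list[int]:
    """Indices of the p-values that survive a Bonferroni correction."""
    cutoff = bonferroni_threshold(alpha, len(p_values))
    return [i for i, p in enumerate(p_values) if p <= cutoff]


if __name__ == "__main__":
    # With 20 independent comparisons at the 5% level, a fluke is more likely than not.
    print(f"{family_wise_error_rate(0.05, 20):.2f}")  # prints 0.64

    # Hypothetical p-values for ten photo categories: 0.03 passes a naive 5% test...
    p_values = [0.03, 0.21, 0.48, 0.62, 0.09, 0.77, 0.35, 0.55, 0.14, 0.91]
    # ...but not the corrected per-test cutoff of 0.05 / 10 = 0.005.
    print(significant_after_bonferroni(p_values))  # prints []
```

Bonferroni is deliberately conservative; the point here is only that a result which looks significant in isolation can disappear once you account for how many places you looked.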

In science, thankfully, it’s easy for somebody else to repeat the experiment and validate the correlation, ideally before a respected journal makes a fool of itself (although I suspect they’re simply making a calculated bid for more publicity, and it’s working very successfully).

In business, it’s much harder to know whether your “results” are valid. The same problem exists: people are looking for a certain type of result, and keep running the numbers until they find something that looks like a relationship (“Look! Customer satisfaction is correlated with age!”). But it’s much harder to “rerun the experiment”, and businesses don’t always have, or take, the time to check their results.
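One cheap partial substitute for rerunning the experiment is a holdout check: hunt for relationships on one part of the data, then test only the winning relationship on data you haven’t looked at. Here is a minimal Python sketch of that idea. The customer data is hypothetical and deliberately pure noise, and the sizes, the 50/50 split, and the seed are arbitrary assumptions.

```python
import math
import random


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mx = sum(xs) / n
    my = sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    return cov / math.sqrt(vx * vy)


def explore_then_confirm(n_customers=400, n_attributes=25, seed=7):
    """Pick the attribute most correlated with satisfaction on the first half
    of the data, then measure that same correlation on the untouched second half.

    Returns (attribute index, correlation when found, correlation on holdout).
    """
    rng = random.Random(seed)
    # Hypothetical data: satisfaction and every attribute are independent noise.
    satisfaction = [rng.gauss(0, 1) for _ in range(n_customers)]
    attributes = [[rng.gauss(0, 1) for _ in range(n_customers)] for _ in range(n_attributes)]

    half = n_customers // 2
    explore = slice(0, half)
    holdout = slice(half, n_customers)

    # The "search": keep whichever attribute looks strongest on the exploration half.
    best = max(
        range(n_attributes),
        key=lambda k: abs(pearson(attributes[k][explore], satisfaction[explore])),
    )
    r_explore = pearson(attributes[best][explore], satisfaction[explore])
    r_holdout = pearson(attributes[best][holdout], satisfaction[holdout])
    return best, r_explore, r_holdout


if __name__ == "__main__":
    best, r_explore, r_holdout = explore_then_confirm()
    print(f"attribute {best}: r = {r_explore:.2f} when found, {r_holdout:.2f} on holdout")
```

Because the winning attribute was chosen for its strength on the exploration half, that correlation is inflated by the search itself; on the holdout half it will typically shrink back towards zero. A relationship that only survives in the data you searched is a hypothesis, not a finding.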

Despite having worked in BI for over twenty years (or maybe because of it), I’m deeply distrustful of most corporate analytics. I believe business analytics is essential, but it’s equally essential to treat any relationship you find as a working hypothesis, to be validated through further analysis (remember that correlation is not causation) and through expert discussion. As with peer-reviewed science papers, the best way to deal with potential analysis problems is greater transparency, and social BI technologies like Streamwork are becoming increasingly important here.