Predictive analytics is just a bunch of math, isn’t it? After all, algorithms in the form of matrix algebra, summations, integrals, multiplies and adds are the core of what predictive modeling algorithms do. Even rule-based approaches need math to compute how good the if-then-else rules are.

I was participating in a predictive analytics course recently, and at the end of two days of instruction a participant asked this question: “It’s been a long time since I’ve had to do this kind of math and I’m a bit rusty. Is there a book that would help me learn the techniques without the math?”

The question about math was interesting. But do we need to know the math to build models well? Anyone can build a bad model, but to build a good model, don’t we need to know what the algorithms are doing? The answer, of course, depends on the role of the analyst. I contend, however, that for most predictive analytics projects, the answer is “no”.

Let’s consider building decision tree models. What options does one need to set to build good trees? Here is a short list of common knobs that most predictive analytics software packages let you set:

1. Splitting metric (CART style trees, C5 style trees, CHAID style trees, etc.)
2. Terminal node minimum size
3. Parent node minimum size
4. Maximum tree depth
5. Pruning options (standard error, Chi-square test p-value threshold, etc.)

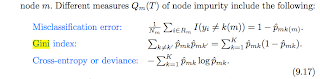

The most mathematical of these knobs is the splitting metric. CART style trees use the Gini Index, C5 style trees use Entropy (information gain), and CHAID style trees use the chi-square test as the splitting criterion. “The Elements of Statistical Learning”, which I consider the best technical book on data mining and statistical learning methods, has this description of the splitting criteria for decision trees, including the Gini Index and Entropy:
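In text form, the two measures named above are usually written as follows for a node $m$ in which a proportion $\hat{p}_{mk}$ of the records belongs to class $k$, with $K$ classes in total. This is the common textbook notation, offered as a reference sketch rather than a verbatim quotation of the book.

$$
\text{Gini index: } \sum_{k=1}^{K} \hat{p}_{mk}\,(1 - \hat{p}_{mk})
$$

$$
\text{Entropy: } -\sum_{k=1}^{K} \hat{p}_{mk} \log \hat{p}_{mk}
$$

Both are zero when a node contains a single class and largest when the classes are evenly mixed.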

To a mathematician, these make sense. But without a mathematics background, these equations will be at best opaque and at worst incomprehensible. (And these are not very complicated. Technical textbooks and papers describing machine learning algorithms can be quite difficult to understand, even for seasoned but out-of-practice mathematicians.)

As a predictive modeler with a mathematics background, I must say that the actual splitting equations almost never matter to me. Gini and Entropy often produce the same splits, or at least similar ones. CHAID differs more, especially in how it creates multi-way splits. But even here, the biggest difference for me is not the math itself, but simply that the algorithms use different tests for determining “good” splits.
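The claim that Gini and Entropy often agree is easy to check by hand. The following is a minimal sketch, not any package's implementation: the function names and the ten-record toy data set are made up for illustration, and it only searches binary splits on one numeric input. On this data both criteria pick the same threshold; on other data they can disagree.

```python
import math


def gini(p):
    """Gini index of a two-class node whose positive-class proportion is p."""
    return 2.0 * p * (1.0 - p)


def entropy(p):
    """Entropy in bits of a two-class node; a pure node has zero entropy."""
    if p <= 0.0 or p >= 1.0:
        return 0.0
    return -(p * math.log2(p) + (1.0 - p) * math.log2(1.0 - p))


def best_threshold(xs, ys, impurity):
    """Return (threshold, weighted child impurity) for the best binary split."""
    pairs = sorted(zip(xs, ys))
    n = len(pairs)
    best = None
    for i in range(1, n):
        if pairs[i - 1][0] == pairs[i][0]:
            continue  # no threshold fits between two equal values
        left = [y for _, y in pairs[:i]]
        right = [y for _, y in pairs[i:]]
        # Weight each child's impurity by its share of the records.
        score = (len(left) / n) * impurity(sum(left) / len(left)) + (
            len(right) / n
        ) * impurity(sum(right) / len(right))
        threshold = (pairs[i - 1][0] + pairs[i][0]) / 2.0
        if best is None or score < best[1]:
            best = (threshold, score)
    return best


# Made-up toy data: one numeric input and a 0/1 target.
xs = [1, 2, 3, 4, 5, 6, 7, 8, 9, 10]
ys = [0, 0, 0, 0, 0, 1, 0, 1, 1, 1]

gini_split = best_threshold(xs, ys, gini)
entropy_split = best_threshold(xs, ys, entropy)
print("Gini    best threshold:", gini_split[0])
print("Entropy best threshold:", entropy_split[0])
```

The two functions give different impurity values for the same node, but what matters for the tree is the ranking of candidate splits, and that ranking is what tends to match.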

There are, however, very important reasons for someone on the team to understand the mathematics or at least the way these algorithms work qualitatively. First and foremost, understanding the algorithms helps us uncover why models go wrong. Models can be biased toward splitting on particular variables or even particular records. In some cases, it may appear that the models are performing well but in actuality they are brittle. Understanding the math can help remind us that this may happen and why.

The fact that linear regression uses a quadratic cost function tells us that outliers affect overall error disproportionately. Understanding how decision trees measure differences between the parent population and sub-populations informs us why a high-cardinality variable may be showing up at the top of our tree, and why additional penalties may be in order to reduce this bias. Seeing the computation of information gain (derived from Entropy) tells us that binary classification with a small target value proportion (such as having 5% 1s) often won’t generate any splits at all.
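Two of these effects can be seen with a few lines of arithmetic. The sketch below uses invented numbers throughout: a hypothetical split that gives the same relative lift on a 5% target and on a 50% target, and a made-up list of ten residuals with one outlier. Whether a small gain actually stops a tree from splitting depends on the minimum-gain or pruning settings of the software in use, which are not modeled here.

```python
import math


def entropy(p):
    """Entropy in bits of a two-class node whose positive-class proportion is p."""
    if p <= 0.0 or p >= 1.0:
        return 0.0
    return -(p * math.log2(p) + (1.0 - p) * math.log2(1.0 - p))


def information_gain(parent_rate, children):
    """children: list of (weight, positive_rate) pairs whose weights sum to 1."""
    return entropy(parent_rate) - sum(w * entropy(r) for w, r in children)


# Hypothetical split into two equal halves, one at 1.4x the base rate and
# the other at 0.6x, applied to a rare target and to a balanced target.
rare_gain = information_gain(0.05, [(0.5, 0.07), (0.5, 0.03)])
balanced_gain = information_gain(0.50, [(0.5, 0.70), (0.5, 0.30)])

print(f"parent entropy at 5% 1s: {entropy(0.05):.3f} bits")
print(f"gain with a 5% target:   {rare_gain:.4f} bits")
print(f"gain with a 50% target:  {balanced_gain:.4f} bits")

# Made-up residuals: nine errors of size 1 and a single outlier of size 10.
residuals = [1.0] * 9 + [10.0]
squared_share = residuals[-1] ** 2 / sum(r ** 2 for r in residuals)
absolute_share = abs(residuals[-1]) / sum(abs(r) for r in residuals)

print(f"outlier share of squared error:  {squared_share:.1%}")
print(f"outlier share of absolute error: {absolute_share:.1%}")
```

With a 5% target the parent node starts with very little entropy to remove, so even a split that separates the classes reasonably well yields a tiny gain. In the second half, one record out of ten accounts for most of the squared error but only about half of the absolute error, which is the disproportion the quadratic cost function introduces.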

The answer to the question of whether predictive modelers need to know math is this: no, they don’t need to understand the mathematical notation, but neither should they ignore the mathematics. Instead, we all need to understand the effects of the mathematics on the algorithms we use. “Those who ignore statistics are condemned to reinvent it,” warns Bradley Efron of Stanford University. The same applies to mathematics.

(Note: this post was first published in the March 2013 Edition of the Predictive Analytics Times)