Despite–or perhaps because of–the tremendous cost of data quality issues, most organizations are struggling to address them. We believe there are five primary reasons that they are failing:

- Our systems are more complex than ever before. Many companies now hold more information than ever before, which requires greater integration. New regulations, M&A activity, globalization, and increasing customer demands collectively mean that IM challenges are increasing, both in number and in complexity.

- Siloed, short-term project delivery focus. Many projects are funded at a departmental level. As such, they typically don’t account for the unexpected effects of how their data will be used by others. Data flows among disparate systems, and the design of these connection points, must transcend strict project boundaries.

- Traditional development methods do not place appropriate focus on data management. Many projects are focused more on functionality and features than on information. The desire to build new functionality (for the sake of new functionality) often results in information being left by the wayside.

- DQ issues are often hidden and persistent. Lamentably, DQ issues can remain unnoticed for some time. Ironically, some end-users may suspect that the data in the systems on which they rely to make decisions is often inaccurate, incomplete, out-of-date, invalid, and/or inconsistent. These issues are often propagated to other systems as organizations increase connectivity. In the end, many organizations tend to underestimate the DQ issues in their systems.

- DQ is fit for purpose. Many DQ and IM professionals know all too well that it is difficult for end-users of downstream systems to improve the DQ of their systems. While the reasons vary, perhaps the biggest culprit is that the data is entered via customer-facing operational systems. The clerks who enter it often do not have the same incentive to maintain high DQ; they are focused on entering data quickly and without rejection by the system at the point of entry. Eventually, however, errors become apparent as data is integrated, summarized, standardized, and used in another context. At this point, DQ issues begin to surface.
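To make the hidden-issue point concrete, the following is a minimal sketch of a profiling pass that counts records failing a few of the quality dimensions named above (incomplete, invalid, out-of-date, inconsistent). The record layout, field names, and rules are hypothetical and chosen only for illustration; a real programme would define its own rules for each dimension.

```python
from datetime import date

# Hypothetical customer records; field names and values are illustrative only.
RECORDS = [
    {"id": 1, "email": "ann@example.com", "country": "US", "updated": date(2024, 5, 1)},
    {"id": 2, "email": "", "country": "US", "updated": date(2024, 4, 12)},
    {"id": 3, "email": "bob-at-example.com", "country": "us", "updated": date(2019, 1, 3)},
]


def profile(records, today, max_age_days=365):
    """Count records failing each check. The rules are assumptions, not a standard."""
    issues = {"incomplete": 0, "invalid": 0, "out_of_date": 0, "inconsistent": 0}
    for rec in records:
        email = rec["email"]
        if not email:
            # Required field left empty at the point of entry.
            issues["incomplete"] += 1
        elif "@" not in email:
            # Value present but fails a (deliberately crude) format check.
            issues["invalid"] += 1
        if (today - rec["updated"]).days > max_age_days:
            # Record not refreshed within the assumed freshness window.
            issues["out_of_date"] += 1
        if rec["country"] != rec["country"].upper():
            # Same value encoded differently across records ("us" vs "US").
            issues["inconsistent"] += 1
    return issues


report = profile(RECORDS, today=date(2024, 6, 1))
print(report)
```

Each of these records would be accepted by a system that checks only that a row can be saved, which is why such defects tend to surface later, once the data is integrated and used in another context.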

A comprehensive data quality program must be defined to meet these challenges.

Why is a New Competency Model Required?

Many organizations have struggled to meet these challenges for one fundamental reason: they fail to focus on the enterprise-wide nature of data management problems. They incorrectly see information as a technology or IT issue, rather than as a fundamental and core business activity. In many ways, information is the new accounting. The solutions required to address complex infrastructure and information issues can’t be delivered on a department-by-department basis.

Defining an enterprise-wide programme, while necessary, is also very difficult. Building momentum for these initiatives takes a long time. Further, such a programme can easily lead to approaches that are out of sync with business needs. Attempts to enforce architectural governance, for example, can quite easily become an ineffectual “toothless watchdog” that provides little value.

Organizations require an approach that can address all of the inherent challenges of

- a federated business model

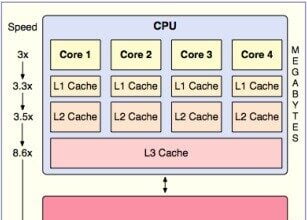

- an often complex technology architecture

Fundamentally, this approach should be manageable, effective, and conducive to innovation. Admittedly, this is not an easy task. This is the rationale for MIKE2.0 and the need for a new competency of Information Development.