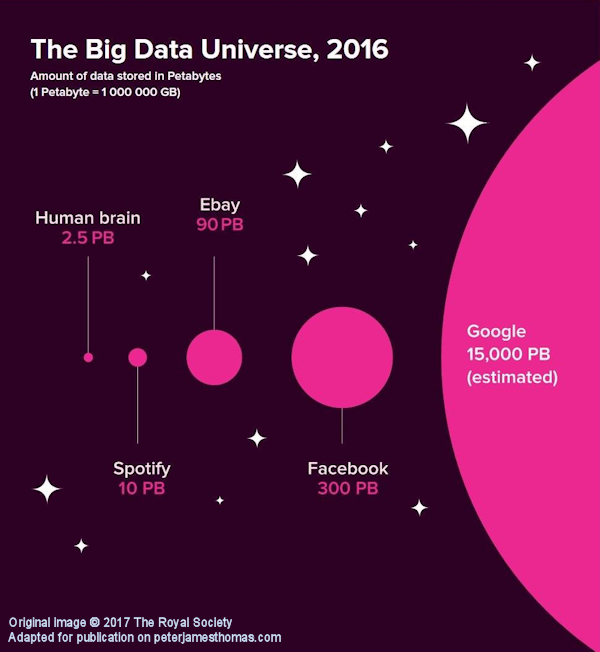

The above image is part of a much bigger infographic produced by The Royal Society about machine learning. You can view the whole image here.

I felt that this component was interesting in a stand-alone capacity.

The legend explains that a petabyte (Pb) is equal to a million gigabytes (Gb) [1], or 1 Pb = $10^{6}$ Gb. A gigabyte itself is a billion bytes, or 1 Gb = $10^{9}$ bytes. Recalling how we multiply indices, we can see that 1 Pb = $10^{6} \times 10^{9}$ bytes = $10^{6+9}$ bytes = $10^{15}$ bytes. $10^{15}$ also has a name: it’s called a quadrillion. Written out longhand:

1,000,000,000,000,000 bytes

The estimate of the amount of data held by Google is fifteen thousand petabytes. Let’s write that out longhand as well:

15,000,000,000,000,000,000 bytes
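The index arithmetic is easy to check in a few lines of Python. This is only a sketch: it assumes the decimal definitions used in the legend, and the constant names are my own rather than anything from the infographic.

```python
# Decimal (power-of-10) definitions, as in the legend; the binary
# (power-of-2) definitions are deliberately ignored here.
BYTES_PER_GB = 10**9
GB_PER_PB = 10**6
BYTES_PER_PB = GB_PER_PB * BYTES_PER_GB  # 10**(6 + 9) = 10**15

GOOGLE_PB = 15_000  # the estimate quoted for Google
google_bytes = GOOGLE_PB * BYTES_PER_PB

# The "," format specifier inserts thousands separators.
print(f"1 Pb      = {BYTES_PER_PB:,} bytes")
print(f"15,000 Pb = {google_bytes:,} bytes")
```

Python integers have arbitrary precision, so none of the zeros get lost along the way.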

That’s a lot of zeros. As is traditional with big numbers, let’s try to put this in context.

- The average size of a photo on an iPhone 7 is about 3.5 megabytes (1 Mb = 1,000,000 bytes), so Google could store about 4.3 trillion such photos.

- Stepping it up a bit, the average size of a high-quality photo stored in CR2 format from a Canon EOS 5D Mark IV is ten times bigger at 35 Mb, so Google could store a mere 430 billion of these.

- A high definition (1080p) movie is on average around 6 Gb, so Google could store the equivalent of 2.5 billion movies.

- If Google employees felt that this resolution wasn’t doing it for them, they could upgrade to 150 million 4K movies at around 100 Gb each.

- If instead, they felt like reading, they could hold the equivalent of The Library of Congress print collections a mere 75 thousand times over [2].

- Rather than talking about bytes, 15,000 petametres is equivalent to about 1,600 light years, and at this distance from us we find Messier Object 47 (M47), a star cluster which was first described an impressively long time ago, in 1654.

- If, instead, we consider 15,000 peta-miles, then this is around 2.5 million light years, which gets us all the way to our nearest large galactic neighbor, the Andromeda Galaxy [3].

The fastest that humankind has got anything bigger than a handful of sub-atomic particles to travel out of the Solar System is the 17 kilometers per second (11 miles per second) at which Voyager 1 is currently speeding away from the Sun. At this speed, it would take the probe about 43 billion years to cover the 15,000 peta-miles to Andromeda. This is over three times longer than our best estimate of the current age of the Universe.

- Finally, a more concrete example. If we consider a small cube, made of, well, concrete, with dimensions of 1 cm in each direction, how big would a stack of 15,000 quadrillion of them be? Well, if arranged into a cube, each of the sides would be just under 25 km (15 and a bit miles) long. That’s a pretty big cube.
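For anyone who wants to check the storage and distance comparisons in the list, here is a rough Python sketch. The item sizes and the Voyager 1 speed are the figures quoted above. The light-year, mile and year conversion factors are approximate values I have assumed, and all the names are purely illustrative.

```python
TOTAL = 15_000 * 10**15  # 15,000 "peta" units: bytes, metres or miles

# Average item sizes quoted in the list, in bytes (decimal units).
ITEM_BYTES = {
    "iPhone 7 photo": 3.5e6,
    "Canon CR2 photo": 35e6,
    "1080p movie": 6e9,
    "4K movie": 100e9,
    "Library of Congress print collections": 200e12,
}


def how_many(item_bytes, total_bytes=TOTAL):
    """Number of items of the given size that fit into total_bytes."""
    return total_bytes / item_bytes


# Approximate conversion factors (assumed, not taken from the infographic).
METRES_PER_LIGHT_YEAR = 9.4607e15
METRES_PER_MILE = 1609.344
SECONDS_PER_YEAR = 365.25 * 24 * 3600

light_years_if_metres = TOTAL / METRES_PER_LIGHT_YEAR
light_years_if_miles = TOTAL * METRES_PER_MILE / METRES_PER_LIGHT_YEAR

VOYAGER_MILES_PER_SECOND = 11  # speed quoted for Voyager 1
voyager_years = TOTAL / VOYAGER_MILES_PER_SECOND / SECONDS_PER_YEAR

for name, size in ITEM_BYTES.items():
    print(f"{name}: {how_many(size):,.0f}")
print(f"15,000 petametres: {light_years_if_metres:,.0f} light years")
print(f"15,000 peta-miles: {light_years_if_miles:,.0f} light years")
print(f"Voyager 1 travel time: {voyager_years:,.0f} years")
```

The results land close to the rounded figures in the list. The exact digits depend on which conversion factors you pick.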

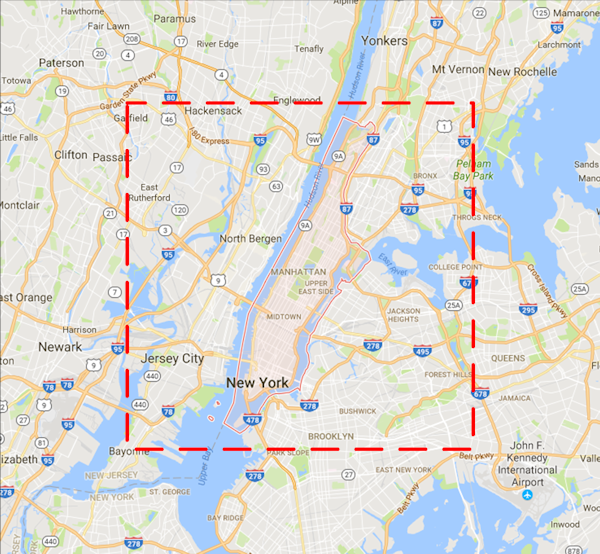

If the base was placed in the vicinity of New York City, it would comfortably cover Manhattan, plus quite a bit of Brooklyn and The Bronx, plus most of Jersey City. It would extend up to Hackensack in the North West and almost reach JFK in the South East. The top of the cube would plow through the Troposphere and get half way through the Stratosphere before topping out. It would vie with Mars’s Olympus Mons for the title of highest planetary structure in the Solar System [4].
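The side length of the cube is just a cube root followed by a change of units. A minimal sketch, with variable names of my own choosing:

```python
CUBES = 15_000 * 10**15  # number of 1 cm concrete cubes

# Stacked into one large cube, the side length (in cm) is the cube root.
side_cm = CUBES ** (1 / 3)
side_km = side_cm / 100 / 1000   # cm -> m -> km
side_miles = side_km / 1.609344  # km -> miles

print(f"Side of the cube: {side_km:.1f} km ({side_miles:.1f} miles)")
```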

It is probably safe to say that 15,000 Pb is an astronomical figure.

Google played a central role in the initial creation of the collection of technologies that we now use the term Big Data to describe. The image at the beginning of this article perhaps explains why this was the case (and indeed why they continue to be at the forefront of developing newer and better ways of dealing with large data sets).

As a point of order, when people start talking about “big data”, it is worth recalling just how big “big data” really is.

Notes

[1] In line with The Royal Society, I’m going to ignore the fact that these definitions were originally all in powers of 2, not 10.

[2] The size of The Library of Congress print collections seems to have become irretrievably connected with the figure 10 terabytes ($10 \times 10^{12}$ bytes) for some reason. No one knows precisely, but 200 Tb seems to be a more reasonable approximation.

[3] Applying the unimpeachable logic of eminent pseudoscientist and numerologist Erich von Däniken, what might be passed over as a mere coincidence by lesser minds instead presents incontrovertible proof that Google’s PageRank algorithm was produced with the assistance of extraterrestrial life; which, if you think about it, explains quite a lot.

[4] Though I suspect not for long, unless we choose some material other than concrete. Then again, I’m not a materials scientist, so what do I know?