The era of Big Data has arrived. Once dismissed as a buzzword, Big Data is now taken seriously: organizations are recognizing the benefits of capturing and analyzing mountains of information to gain actionable insights that foster innovation and competitive advantage. To that end, open-source Hadoop has emerged as the go-to software solution for tackling Big Data. For organizations looking to adopt a Hadoop distribution, Robert D. Schneider, the author of Hadoop for Dummies, has just released an eBook entitled the Hadoop Buyer’s Guide. In the guide, sponsored by Ubuntu, the author explains the main capabilities that allow the Hadoop platform to perform and scale so well. What follows is a brief overview of these four key pillars of Hadoop performance and scalability.

1. Architectural Foundations – According to the author, there are four “critical architecture preconditions” that can have a positive impact on performance.

- Key components built with a systems language like C/C++: Explaining that the open source Apache Hadoop distribution is written in Java, Schneider points out a number of Java-related issues that can compromise performance, such as “unpredictability and latency of garbage collection.” Building key components in C/C++, a systems language used by almost all other enterprise-grade software, can avoid these latency issues.

- Minimal software layers: Likening software layers to “moving parts,” Schneider explains that the more software layers a system has, the greater the potential to compromise performance and reliability. Minimizing software layers spares Hadoop from having to navigate separate layers such as the local Linux file system, the Java Virtual Machine, HBase Master and Region Server.

- A single environment platform: In the eBook, Schneider states that a number of Hadoop implementations can’t handle additional workloads unless administrators create separate instances to accommodate those extra applications. To ensure better performance, he recommends a single environment platform capable of handling all Big Data applications.

- Leverage the elasticity and scalability of popular public cloud platforms: Schneider explains that running Hadoop only inside the enterprise firewall is not enough. To maximize performance, the Hadoop distribution needs to run on widely adopted cloud environments such as Amazon Web Services and Google Compute Engine.

2. Streaming Writes – Hadoop implementations work with massive amounts of information, and Schneider emphasizes that the loading and unloading of data must be done as efficiently as possible. This means avoiding batch or semi-streaming processes that are complex and cumbersome, especially when confronted with gigabyte and terabyte data volumes. A better solution, to paraphrase Schneider, is for the Hadoop implementation to expose a standard file interface that gives applications direct access to the cluster. Application servers can then write information directly into the Hadoop cluster, where it is compressed and made instantly available for random read and write access. This speedy, uninterrupted flow of information permits real-time or near-real-time analysis on streaming data, a capability that is critical for rapid decision-making.
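To make the idea of a standard file interface concrete, here is a minimal Python sketch. It assumes the cluster is exposed to the application server as an ordinary mounted directory; the mount path mentioned in the comments and the helper names `append_event` and `read_events` are hypothetical, not taken from the eBook. The demo writes to a temporary directory so it runs anywhere.

```python
import json
import os
import tempfile


def append_event(mount_dir, filename, event):
    """Append one event as a JSON line using ordinary file I/O.

    mount_dir stands in for a directory where the cluster is mounted,
    for example a hypothetical /mnt/hadoop-cluster/ingest path.
    """
    path = os.path.join(mount_dir, filename)
    with open(path, "a", encoding="utf-8") as f:
        f.write(json.dumps(event) + "\n")
        f.flush()
        os.fsync(f.fileno())  # ask the OS to push the write to storage
    return path


def read_events(path):
    """Read back every event written so far, one JSON object per line."""
    with open(path, encoding="utf-8") as f:
        return [json.loads(line) for line in f if line.strip()]


if __name__ == "__main__":
    # A temporary directory plays the role of the cluster mount point.
    with tempfile.TemporaryDirectory() as mount_dir:
        path = append_event(mount_dir, "clicks.jsonl", {"user": "a", "page": "/home"})
        append_event(mount_dir, "clicks.jsonl", {"user": "b", "page": "/cart"})
        for event in read_events(path):
            print(event)
```

The point of the sketch is that the application needs no batch export step or special client library: each record is available to readers as soon as it is written, which is the property the streaming-writes pillar describes.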

3. Scalability – As the author points out, IT organizations looking to fully capitalize on Big Data can sometimes end up wasting money on hardware and other resources that are unnecessary and will never be fully utilized. Or they can try to squeeze every drop they can from their existing, limited computing assets, never fully realizing the benefits of their Big Data. The flexibility and ease of scalability offered by the Hadoop platform allow organizations to more fully leverage all of their data without exceeding budgetary constraints.

4. Real-Time NoSQL – According to Schneider, “More enterprises than ever are relying on NoSQL-based solutions to drive critical business operations.” However, he notes that a number of NoSQL solutions exhibit wild fluctuations in response times, making them unsuitable for core business operations that rely on consistent low latency. A better choice, according to Schneider, is Apache HBase, a NoSQL solution that is built on top of Hadoop. HBase offers storage and real-time analytics, along with MapReduce operations that run on Hadoop. Although HBase does have limitations relating to performance and dependability, the author lists a number of innovations capable of transforming HBase applications to “meet the stringent needs for most online applications and analytics.”
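One way to see why fluctuating response times matter is to compare the median latency with the tail latency. The following Python sketch uses nearest-rank percentiles; the function name `latency_summary` and both sample lists are made up for illustration and are not measurements of HBase or any other product.

```python
def latency_summary(samples_ms):
    """Return median, 99th percentile, and max latency (nearest-rank method)."""
    ordered = sorted(samples_ms)
    n = len(ordered)

    def percentile(p):
        # Nearest rank: ceil(p * n / 100), computed with integers only.
        rank = max(1, (p * n + 99) // 100)
        return ordered[rank - 1]

    return {"p50": percentile(50), "p99": percentile(99), "max": ordered[-1]}


if __name__ == "__main__":
    # Illustrative, invented response times in milliseconds.
    steady = [2, 2, 3, 2, 3, 2, 2, 3, 2, 3]
    spiky = [2, 2, 3, 2, 3, 2, 2, 3, 2, 250]

    print("steady:", latency_summary(steady))
    print("spiky: ", latency_summary(spiky))
```

Both invented workloads have the same median, but the second has a tail more than a hundred times slower. A store with that profile looks fast on average yet fails the "consistent low latency" requirement that the text describes.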

In identifying and discussing these four key pillars of Hadoop performance and scalability, Robert D. Schneider provides strong evidence as to why open source Hadoop is the dominant software solution for analyzing Big Data.

If you’re interested in learning how to select the right Hadoop platform for your business, along with best practices for successful implementations, you can attend Robert’s upcoming webinar, Hadoop or Bust: Key Considerations for High Performance Analytics Platform, and download the eBook here.

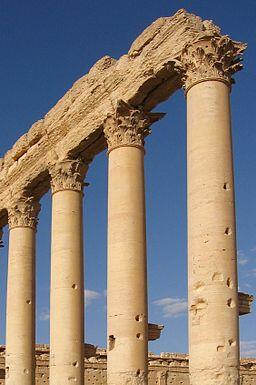

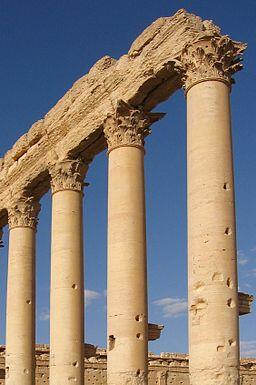

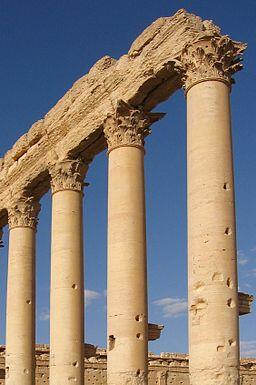

Image source: commons.wikimedia.com